In Part 1 of this blog series, we took a deep dive into the images stored in Docker Hub, the world’s largest container registry. We did this to give you a better understanding of how our updated Terms of Service will affect development teams that use Docker Hub to manage their container images and CI/CD pipelines.

Part 2 takes a deep dive into rate limits for container image pulls, which were also announced as part of our updated Docker Terms of Service (ToS) communications. The following pull rate limits for Docker subscription plans take effect November 1, 2020:

- Free plan – anonymous users: 100 pulls per 6 hours

- Free plan – authenticated users: 200 pulls per 6 hours

- Pro plan: 50,000 pulls in a 24-hour period

- Team plan: 50,000 pulls in a 24-hour period

Docker defines pull rate limits as the number of manifest requests to Docker Hub. Rate limits for Docker image pulls are based on the account type of the user requesting the image – not the account type of the image’s owner. For anonymous (unauthenticated) users, pull rates are limited based on the individual IP address.
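To make the rule above concrete, here is a minimal sketch of how a limit could be chosen for a request. It is an illustration only, not Docker Hub's implementation: the names `PullRequest`, `PULL_LIMITS`, and `limit_for` are hypothetical, and the assumption that authenticated users are tracked per username is ours. The numbers come from the plan list above.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

SIX_HOURS = 6 * 60 * 60
ONE_DAY = 24 * 60 * 60

# Limits announced in this post: plan -> (max pulls, window in seconds).
PULL_LIMITS = {
    "anonymous": (100, SIX_HOURS),
    "free": (200, SIX_HOURS),
    "pro": (50_000, ONE_DAY),
    "team": (50_000, ONE_DAY),
}


@dataclass(frozen=True)
class PullRequest:
    """Hypothetical view of a pull: who is asking, never who owns the image."""
    client_ip: str
    username: Optional[str] = None  # None means anonymous (unauthenticated)
    plan: Optional[str] = None      # the requester's plan, if authenticated


def limit_for(request: PullRequest) -> Tuple[str, int, int]:
    """Return (bucket key, max pulls, window seconds) for the requester."""
    if request.username is None:
        # Anonymous pulls are limited per individual IP address.
        limit, window = PULL_LIMITS["anonymous"]
        return f"ip:{request.client_ip}", limit, window
    # Assumption: authenticated pulls are tracked per account.
    limit, window = PULL_LIMITS[request.plan or "free"]
    return f"user:{request.username}", limit, window
```

Note that the image owner's account type never appears as an input, which mirrors the rule that only the requesting user's account type matters.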

We’ve been getting questions from customers and the community regarding container image layers. We are not counting image layers as part of the pull rate limits. Because we are limiting on manifest requests, the number of layers (blob requests) related to a pull is unlimited at this time. This is a change based on community feedback in order to be more user-friendly, so users do not need to count layers on each image they may be using.

A deeper look at Docker Hub pull rates

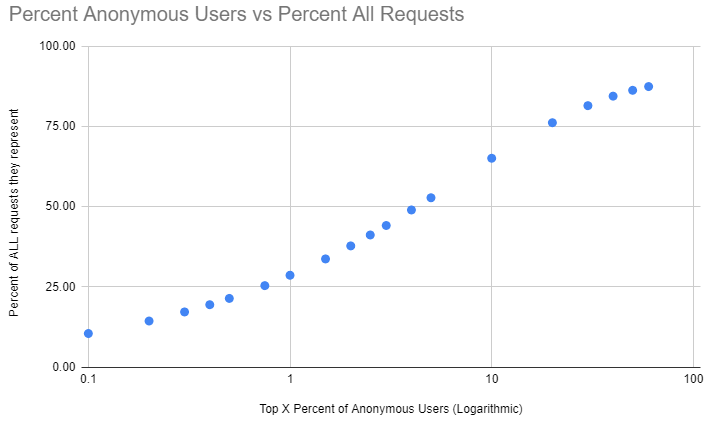

In determining why rate limits were necessary and how to apply them, we spent considerable time analyzing image downloads from Docker Hub. What we found confirmed that the vast majority of Docker users pulled images at a rate you would expect for normal workflows. However, there is an outsized impact from a small number of anonymous users. For example, roughly 30% of all downloads on Hub come from only 1% of our anonymous users.

The new pull limits are based on this analysis, such that most of our users will not be impacted. These limits are designed to accommodate normal use cases for developers – learning Docker, developing code, building images, and so forth.

Helping developers understand pull rate limits

Once we understood the impact and where the limits should land, we needed to define at a technical level how these limits should work. Limiting image pulls from a Docker registry is complicated. You won’t find a pull API in the registry specification – it doesn’t exist. In fact, an image pull is a combination of manifest and blob API requests, and these are issued in different patterns depending on the client state and the image in question.

For example, if you already have the image, the Docker Engine client will issue a manifest request, realize it has all of the referenced layers based on the returned manifest, and stop. On the other hand, if you are pulling an image that supports multiple architectures, a manifest request will be issued and return a list of image manifests for each supported architecture. The Docker Engine will then issue another specific manifest request for the architecture it’s running on, and receive a list of all the layers in that image. Finally, it will request each layer (blob) it is missing.
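The two patterns described above can be modeled with a few lines of Python. This is a simplified sketch, not the Docker Engine's actual logic: the function `count_pull_requests` and its parameters are hypothetical, it assumes a multi-architecture pull always makes the second manifest request, and it ignores other blobs such as the image configuration.

```python
from typing import Dict, Set


def count_pull_requests(
    multi_arch: bool, image_layers: Set[str], cached_layers: Set[str]
) -> Dict[str, int]:
    """Count registry requests for one pull under the simplified flow above."""
    # Every pull starts with one manifest request for the requested tag.
    manifest_requests = 1
    if multi_arch:
        # The first response was a list of per-architecture manifests,
        # so a second manifest request fetches the one for this platform.
        manifest_requests += 1
    # Only layers the client does not already have are requested as blobs.
    missing_layers = image_layers - cached_layers
    return {
        "manifest_requests": manifest_requests,
        "blob_requests": len(missing_layers),
    }


# An image that is already fully present locally: one manifest, no blobs.
already_have = count_pull_requests(False, {"l1", "l2"}, {"l1", "l2"})

# A multi-architecture image with one of three layers cached.
fresh_multi_arch = count_pull_requests(True, {"l1", "l2", "l3"}, {"l1"})
```

In this model, `already_have` contains one manifest request and zero blob requests, while `fresh_multi_arch` contains two manifest requests and two blob requests.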

So an image pull is actually one or two manifest requests, plus zero or more blob (layer) requests with no fixed upper bound. Historically, Docker monitored rate limits based on blobs (layers), because a blob most closely correlated with bandwidth usage. However, we heard from the community that this approach is difficult to track, leads to an inconsistent experience depending on how many layers an image has, discourages good Dockerfile practices, and is not intuitive for users who just want to get stuff done without being experts on Docker images and registries.

As such, we are rate limiting based on manifest requests moving forward. This has the advantage of being more directly coupled with a pull, so it is easy for users to understand. There is a small tradeoff – if you pull an image you already have, this is still counted even if you don’t download the layers. Overall, we hope this method of rate limiting is both fair and user-friendly.
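To illustrate what counting manifest requests means in practice, here is a minimal sketch of a limiter. It is not how Docker Hub enforces limits: the class `ManifestRateLimiter` is hypothetical, and the fixed-window behavior is an assumption, since this post does not specify how the 6-hour or 24-hour window is measured.

```python
from typing import Dict, Tuple


class ManifestRateLimiter:
    """Fixed-window counter of manifest requests per key (illustrative only)."""

    def __init__(self, limit: int, window_seconds: int) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        # key -> (window start time, manifest requests counted in that window)
        self._windows: Dict[str, Tuple[float, int]] = {}

    def allow_manifest_request(self, key: str, now: float) -> bool:
        """Count one manifest request for `key`; return False if over the limit."""
        start, count = self._windows.get(key, (now, 0))
        if now - start >= self.window_seconds:
            # Assumption: the window resets entirely once it has elapsed.
            start, count = now, 0
        if count >= self.limit:
            self._windows[key] = (start, count)
            return False
        self._windows[key] = (start, count + 1)
        return True


# Anonymous limit from this post: 100 pulls per 6 hours, keyed by IP address.
limiter = ManifestRateLimiter(limit=100, window_seconds=6 * 60 * 60)
results = [
    limiter.allow_manifest_request("ip:203.0.113.7", now=float(second))
    for second in range(101)
]
```

In this sketch the first 100 entries of `results` are `True` and the last one is `False`. Blob requests never reach the limiter, so the number of layers does not matter, and a pull of an image you already have still consumes one manifest request, which is the tradeoff described above.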

We welcome your feedback

We will be monitoring and adjusting these limits over time based on common use cases to make sure the limits are appropriate for each tier of user, and in particular, that we are never blocking developers from getting work done.

Stay tuned in the coming weeks for a blog post about configuring CI and production systems in light of these changes.

Finally, as part of Docker’s commitment to the open source community, before November 1 we will be announcing availability of new open source plans. To apply for an open source plan, please complete the short form here.

For more information regarding the recent terms of service changes, please refer to the FAQ.

For users who need higher image pull limits, Docker also offers 50,000 pulls in a 24-hour period as a feature of the Pro and Team plans. Visit www.docker.com/pricing to view the available plans.

As always, we welcome your questions and feedback at [email protected].
